Terminator Conundrum: Perhaps a Middle Ground?

HISTORY REPEATING?

History is replete with examples of governments that agreed to temporary moratoria or outright bans on technologies, but then ignored those restrictions when circumstances changed. In 1899, attendees at the Hague Convention enacted a prohibition on discharging projectiles or explosives from balloons during war. [1] Nation states then ignored their signatures when aerial weapons proved invaluable in World War I. [2]

In the early days of the 20th century, nations banned unrestricted submarine warfare, in which submarines sink civilian vessels such as freighters and tankers without warning. [3] Nevertheless, the Germans ignored these restrictions in World War I, as exemplified by the dramatic sinking of the RMS Lusitania by a German U-boat in 1915. [4] Such actions were condemned worldwide, especially by the United Kingdom and the United States. Then Pearl Harbor occurred, and the gloves came off. The order to “EXECUTE AGAINST JAPAN UNRESTRICTED AIR AND SUBMARINE WARFARE” was issued to all US Navy ship and submarine commanders on December 7, 1941, just four and a half hours after the attack at Pearl Harbor (as noted in the excellent book “Army of None: Autonomous Weapons and the Future of War,” by Paul Scharre). [5] A massive US submarine campaign in the Asia-Pacific region was initiated to attack Japanese merchant vessels in defense of US troops deploying there. [6]

It is often said that history repeats itself, and we seem to be seeing these same trends today with autonomous weapons. During the Obama Administration, Deputy Secretary of Defense Robert O. Work heavily promoted what he called the “Third Offset,” which sought to outmatch adversaries completely via advanced systems, including autonomous weapons and artificial intelligence (AI). Despite this desire for battlefield dominance, Mr. Work emphasized that there would always be a “human in the loop,” meaning the systems would never become completely autonomous, and in particular would never make kill decisions on their own. [7] However, R&D on such intelligent systems has created significant controversy.

PUSHBACK

Talk of, and investment in, autonomous weapons have initiated fierce debate within defense and technology circles. What happens if the United States insists on this policy of a human in the loop while its adversaries remove that step with fully autonomous weapons, and consequently can act faster and with a higher kill rate on the battlefield? Moreover, are such systems error-free? Bias-free? Reliable? Given these and other technical and ethical concerns, in 2016 the Vice Chairman of the Joint Chiefs of Staff, Gen. Paul J. Selva, labeled the question of whether we should remove human oversight from any automated military operation the Terminator Conundrum, after the Terminator movies in which robots take over the world. [8]

International organizations have also weighed in. Since 2013, the United Nations has had an active commission focused on addressing questions regarding lethal autonomous weapons systems (LAWS). [9] Several countries have even gone so far as to support a ban on lethal autonomous weapons, although curiously the list does not include any of the major military powers. “Only twenty-two nations have said they support a ban on lethal autonomous weapons: Pakistan, Ecuador, Egypt, the Holy See, Cuba, Ghana, Bolivia, Palestine, Zimbabwe, Algeria, Costa Rica, Mexico, Chile, Nicaragua, Panama, Peru, Argentina, Venezuela, Guatemala, Brazil, Iraq, and Uganda (as of November 2017). None of these states are major military powers and some, such as Costa Rica or the Holy See, lack a military entirely.” [5]

Fast forward two years, and we see the controversy heating up even more. In June 2018, more than 3,000 Google employees protested Google’s activities with the US Department of Defense (DoD) in a letter to Google’s C-suite (about a dozen employees are said to have even resigned). They demanded that Google cease engagement in the DoD’s Project Maven, which was focused initially on using AI to improve image analysis. Google’s leadership subsequently announced it would not renew its contract with the DoD. Moreover, Google drafted guidelines for AI that exclude the development of autonomous weapons and most applications of AI that might harm people. [10] In late July 2018, 2,400 researchers in 36 countries joined 160 organizations in signing a letter, drafted by the Future of Life Institute, to “call upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons.” [11], [12], [13] This letter builds upon a similar letter signed in 2015. [14]

While these individuals and organizations are admirable for standing up for their principles and ethics, it is doubtful that individual protests, corporate withdrawals, or international bodies will sway governments from continuing to develop and deploy autonomous weapons. The advantages of autonomous weapons are just too enticing for some countries, especially those whose ethics and governments differ from those of Western countries:

“Robots have many battlefield advantages over traditional human-inhabited vehicles. Unshackled from the physiological limits of humans, uninhabited (“unmanned”) vehicles can be made smaller, lighter, faster, and more maneuverable. They can stay out on the battlefield far beyond the limits of human endurance, for weeks, months, or even years at a time without rest. They can take more risk, opening up tactical opportunities for dangerous or even suicidal missions without risking human lives.” [5]

Three nation states in particular currently lead efforts toward developing these systems: the United States, China and Russia.

NATION STATE INTEREST

United States. The aforementioned Project Maven is the first wave of a much larger planned AI focus within the DoD. In June 2018, the DoD initiated the Joint Artificial Intelligence Center (JAIC), with the “overarching goal of accelerating the delivery of AI-enabled capabilities, scaling the Department-wide impact of AI, and synchronizing DoD AI activities to expand Joint Force advantages.” [15] Project Maven and other AI projects will be folded into the JAIC. The DoD requested $75 million to stand up the JAIC, with an anticipated need of $1.7 billion over six years. [16] Thus, regardless of the stances of Google and others against autonomous weapons, the DoD is proceeding with its own research and development. Potential strategic advantages for C4ISR [17] are simply too large to ignore for the world’s largest defense department. Furthermore, given the pots of money involved (“Global spending on military robotics is estimated to reach $7.5 billion per year in 2018, with scores of countries expanding their arsenals of air, ground, and maritime robots.” [5]), other companies are more than happy to fill the void that Google leaves behind to support the DoD. Meanwhile, US adversaries are doing even more.

China. Previously not engaged seriously in the AI community, China had a wake-up call in 2016 when the AI company DeepMind beat the world-champion Go player, South Korean Lee Sedol, with its program AlphaGo. Long considered a difficult game to master (with vastly more possible move sequences than the more pedestrian game of chess), Go presented programmers with a seemingly impossible task: challenging world-class human players. Then AlphaGo beat Mr. Lee four games to one, demonstrating that AI (at least deep learning) had come of age. [18] Some see simulating the game Go as an inchoate step toward full battlefield simulations. [19]

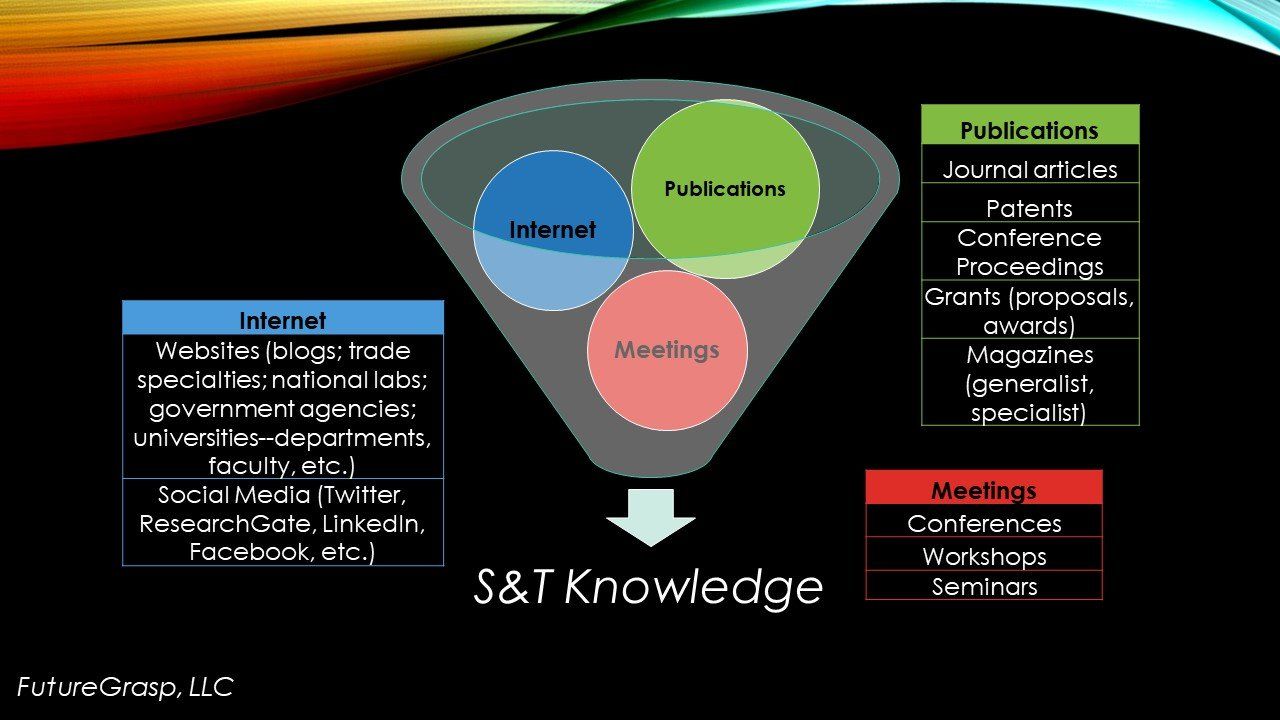

Chinese investment in AI quickly ramped up. China announced a plan in 2017 to become the world leader in AI by 2030. [20] Its nation state investments go beyond mere algorithms: “Its investment in A.I., chips and electric cars combined has been estimated at $300 billion.” [21] Taking a holistic view of technologies with the potential to complement and accelerate AI is critical for success in autonomous weapons: areas such as semiconductors, data storage, sensors, cloud computing, robotics, and supercomputers all affect the pace of AI development. [22]

China plans to leverage this funding and these AI capabilities to create a suite of autonomous weapons. For example, Chinese industry is testing swarms of UAVs [23] (up to 1,000 at once) in airshows to demonstrate their AI orchestration for advanced maneuvers. There is strong interest by the Chinese military in applying such drones in combat situations. “The attraction of mastering drone swarms resonates in both the defensive and offensive realms of warfare. They can be used as part of air defense measures in the event of an attack and a major war, being operated by another aircraft and released in mid-air by fighter jets. In an offensive scenario, smart swarms can drastically undermine and neutralize enemy defense systems, freezing defensive elements in a given battlefield and allowing for near-free movement and roaming of offensive forces.” [24] Moving from UAVs to UUVs [25], the PLA [26] recently gave an interview detailing a classified project to arm submarines with AI for “handling such tasks as surveillance, minelaying, and even attacks on enemy vessels, relying on AI to adjust to changing conditions.” [27] It remains to be seen how effective these tools are, but we can be assured that China’s interest in AI and autonomous weapons is profound and its advances will continue.

Curiously, at the last UN LAWS meeting (April 2018), China endorsed a call to prohibit the use of autonomous weapons, yet it did not address their development or production. [28] This strikes some as a bit disingenuous, given China’s already large investments in AI. By not endorsing a ban on development or production, China might actually gain an advantage. It would appear to be playing along with the international community, yet never cease its R&D on autonomous weapons, thus retaining the option to deploy them if its senior leadership felt the need.

Russia. Russia is also aggressively pursuing AI. In a speech to school children in 2017, Vladimir Putin stated, “Artificial intelligence is the future, not only for Russia, but for all humankind. It comes with colossal opportunities, but also threats that are difficult to predict. Whoever becomes the leader in this sphere will become the ruler of the world.” [29] He further commented, “when one party's drones are destroyed by drones of another, it will have no other choice but to surrender.” [30] Critically, Russia announced in 2017 that it would not be influenced by the UN bodies seeking a ban on autonomous weapons. [31]

In July 2018, Russia released an ambitious 10-point plan for organizing its AI efforts, including military engagements. [32] Russia is particularly active in robotics:

“While the United States has been very reluctant to arm ground robots, with only one short-lived effort during the Iraq war and no developmental programs for armed ground robots, Russia has shown no such hesitation. Russia is developing a fleet of ground combat robots for a variety of missions, from protecting critical installations to urban combat. Many of Russia’s ground robots are armed, ranging from small robots to augment infantry troops to robotic tanks. How much autonomy Russia is willing to place into its ground robots will have a profound impact on the future of land warfare.” [5]

Thus, Russia is not dissuaded by calls for bans on autonomous weapons; on the contrary, it is accelerating its efforts to field these systems.

LIMITATIONS

Despite such strong nation state interest in autonomous weapons, AI must still overcome many fundamental limitations before the science fiction dreams of weapons developers are achieved. Issues such as algorithmic bias, cybersecurity (including spoofing attacks), lack of training data, opaque algorithms, algorithmic errors, and slow compute time may hinder the more advanced versions of fully autonomous systems. Moreover, all these challenges are salient not just in military projects but across the full spectrum of AI applications. Financial technology (FinTech), healthcare, and autonomous vehicles, along with many other uses, need basic research to ensure their safety and effectiveness.

Algorithmic Bias. Until we create code that can efficiently write new code itself, algorithms will be programmed by humans, who have favoritisms and prejudices. Moreover, AI training datasets are inherently finite and can reflect gender and racial bias. For example, the Amazon facial recognition software Rekognition was used by the ACLU (American Civil Liberties Union) in a test comparing members of Congress against 25,000 criminal mug shots. The test returned 28 false matches of members of Congress to criminals, with a seemingly disproportionate share falling on minorities, implying that facial recognition (face-rec) software can carry racial bias and inherent errors. Amazon claims that the ACLU used the software improperly, applying only the default 80% threshold for a match. [33] Before such software can be deployed in spaces in which people’s lives are at stake, it may be warranted to step back and execute more verification and validation (V&V) of its processing abilities, test for and remove built-in bias, and get a better handle on what are deemed acceptable thresholds for success. “A technology that’s proven to vary significantly across people based on the color of their skin is unacceptable in 21st-century policing.” [34] Such proofs will be especially important when face-rec is deployed in autonomous weapons, which may be making life and death decisions based on its output.
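The dispute over the 80% match threshold can be made concrete with a minimal sketch. The similarity scores below are invented for illustration and are not ACLU or Amazon data, and the function name is hypothetical rather than part of any vendor's API. The sketch only shows that the number of false matches depends heavily on where the threshold is set.

```python
from typing import List


def count_matches(similarity_scores: List[float], threshold: float) -> int:
    """Count comparisons whose similarity meets or exceeds the threshold."""
    return sum(1 for score in similarity_scores if score >= threshold)


# Hypothetical similarity scores (0.0 to 1.0) for face pairs that are all
# DIFFERENT people, so every reported match here is a false match.
# These values are made up for illustration only.
non_matching_pair_scores = [0.42, 0.81, 0.67, 0.93, 0.79,
                            0.85, 0.55, 0.96, 0.88, 0.99]

for threshold in (0.80, 0.95, 0.99):
    false_matches = count_matches(non_matching_pair_scores, threshold)
    print(f"threshold={threshold:.2f} -> false matches: {false_matches}")
```

With these made-up scores, raising the threshold reduces false matches, but in a real system it would also be expected to miss more true matches. Choosing that trade-off is part of deciding what counts as an acceptable threshold for success.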

Cybersecurity. No computer algorithm is immune to hacking. One concern for AI is image spoofing, in which an input is altered so that the AI perceives something different from reality. A recent article in Science clarified the dilemma that AI researchers now confront, and that weapons developers must deal with: “Impressive advances in AI—particularly machine learning algorithms that can recognize sounds or objects after digesting training data sets—have spurred the growth of living room assistants and autonomous cars. But these AIs are surprisingly vulnerable to being spoofed.” [35] A few stickers can make an AI read a stop sign as a speed limit sign. Changing a few pixels in ways undetectable to the human eye can make an AI classify a turtle as a rifle. Thus, even though there has been incredible progress in image analysis via AI, with profound implications for autonomous weapons, it is presently still all too easy to spoof the image and confuse the algorithms. Both improved detection and countermeasures must be researched to diminish the fallibility of AI code.
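A toy sketch can illustrate why small input changes can flip a classifier's output. It assumes a hypothetical four-pixel "image" and a hand-picked linear classifier, not any real vision model. The perturbation nudges each pixel by a small fixed amount in the direction that raises the classifier's score, which is the idea behind gradient-sign style attacks (for a linear model, the gradient of the score with respect to the input is simply the weight vector).

```python
from typing import List


def sign(value: float) -> int:
    """Return +1, 0, or -1 according to the sign of value."""
    return (value > 0) - (value < 0)


def linear_score(weights: List[float], bias: float, pixels: List[float]) -> float:
    """Score of a toy linear classifier: positive means 'rifle'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def classify(weights: List[float], bias: float, pixels: List[float]) -> str:
    return "rifle" if linear_score(weights, bias, pixels) > 0 else "turtle"


def perturb(weights: List[float], pixels: List[float], epsilon: float) -> List[float]:
    """Shift every pixel by at most epsilon in the direction that raises the score."""
    return [p + epsilon * sign(w) for w, p in zip(weights, pixels)]


# Hypothetical model parameters and input, chosen only for illustration.
WEIGHTS = [0.9, -0.6, 0.4, -0.8]
BIAS = -0.1
original_pixels = [0.2, 0.5, 0.3, 0.1]   # pixel intensities in [0, 1]

adversarial_pixels = perturb(WEIGHTS, original_pixels, epsilon=0.1)

print("original   :", classify(WEIGHTS, BIAS, original_pixels))
print("adversarial:", classify(WEIGHTS, BIAS, adversarial_pixels))
```

In this toy case a shift of 0.1 per pixel is enough to change the label. Real images have many thousands of pixels, so the same cumulative effect on the score can be reached with a far smaller change per pixel, which is why such perturbations can be invisible to a person.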

Coding Errors. A challenge with any autonomy is the sheer complexity of the code. “Sophisticated automation requires software with millions of lines of code: 1.7 million for the F-22 fighter jet, 24 million for the F-35 jet, and some 100 million lines of code for a modern luxury automobile.” [5] No single human or even team of humans can catch all the bugs in code, since even if the software appears bug-free in beta testing, unexpected circumstances in the real world can yield surprises. Obviously, this is undesirable in a system with weapons attached to it. What is needed is not just human-developed code, but also code that can repair code. Researchers, such as those at Carnegie Mellon University, are developing code that can scan other code and fix problems automatically. [36] As systems become increasingly complex, such self-correcting code will become even more critical. Research into this area needs to increase, but with the caveat that we should also have safeguards and tracking systems in place to ensure traceability of the corrections newly inserted into the web of code. It is critical to make autonomous systems both less brittle and more verifiable.

Black Box. Any military commander should understand why actions are taken, especially if they result in loss of life or valuable physical assets. Unfortunately, AI using machine learning is mostly a black box: once a result is produced, it is often difficult or impossible to assess how the result was obtained. A DARPA program, Explainable AI, seeks to change that: “Dramatic success in machine learning has led to a torrent of AI applications. Continued advances promise to produce autonomous systems that will perceive, learn, decide, and act on their own. However, the effectiveness of these systems is limited by the machine’s current inability to explain their decisions and actions to human users. The DoD is facing challenges that demand more intelligent, autonomous, and symbiotic systems. Explainable AI—especially explainable machine learning—will be essential if future warfighters are to understand, appropriately trust, and effectively manage an emerging generation of artificially intelligent machine partners.” [37]

Limited Training Data. A two-year-old child can see a ball once and then recognize other balls as play objects forever, but an AI using classic deep learning needs thousands of examples to recognize future objects as belonging to the same object class. Research is underway to diminish this need for copious data, including (as noted in [38]):

· Capsule Networks, which capture hierarchical relationships to learn with less data

· Deep Reinforcement Learning, which combines reinforcement learning with deep neural networks to learn by interacting with the environment

· Generative Adversarial Networks, which use a type of unsupervised deep learning system, implemented as two competing neural networks, enabling machine learning with less human intervention

· Lean and Augmented Data Learning, which leverages different techniques (such as transfer learning or one-shot learning) that enable a model to learn from less data or synthetic data

· Hybrid Learning Models, which combine different types of deep neural networks with probabilistic approaches to model uncertainty

Ultimately, reducing the amount of training data needed is especially critical in autonomous systems, as there are challenges in obtaining real-world data. (One would not initiate a battle just to train an AI on battlefield conditions.)

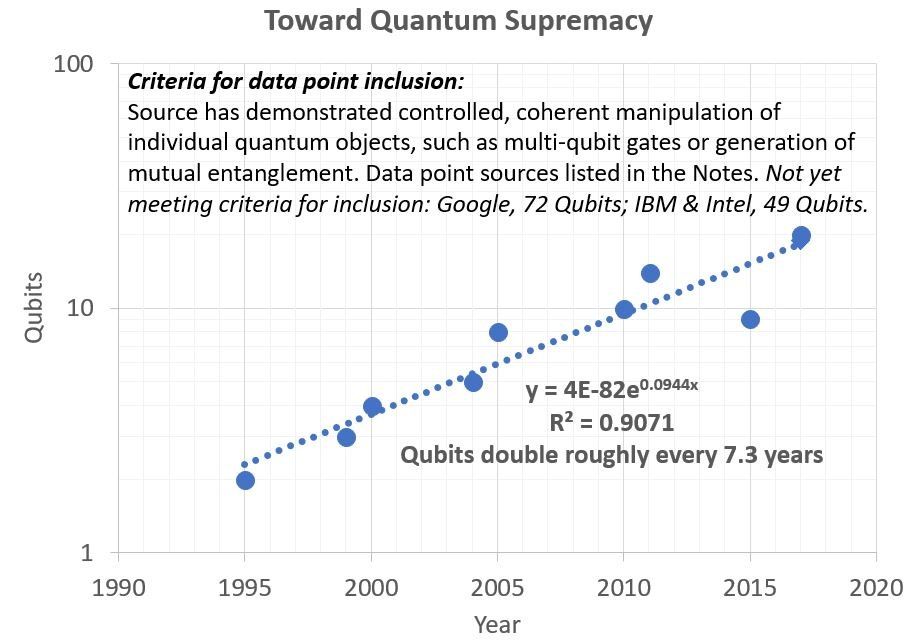

Slow Compute. When operating an autonomous system, whether a civilian autonomous vehicle or a military drone, the speed at which calculations can be made is a critical determinant of functionality. Especially for moving objects, calculations and decisions need to be made at millisecond or faster rates to avoid collisions. “Edge computing,” which performs computation on the device or system itself rather than sending and receiving signals to and from a central compute hub such as a cloud computing center, is a strong trend in AI. Of course, computing on-device requires faster, more mobile computer packages. NVIDIA and other companies are investing heavily in edge computing to bring the calculations onto the device, and thus avoid signal delays. [39] With the ongoing diversification of computer chips beyond Moore’s Law, edge computing will need more research and investment to enable autonomous systems. [40], [41]
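A back-of-the-envelope calculation shows why latency matters for moving systems: the distance covered before a decision arrives is simply speed multiplied by delay. The speed and latency figures below are assumptions chosen for illustration, not measurements of any real vehicle, network, or chip.

```python
def distance_during_latency_m(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance in meters a vehicle covers while waiting latency_ms for a decision."""
    return speed_m_per_s * latency_ms / 1000.0


# Hypothetical values, chosen only for illustration.
SPEED_M_PER_S = 20.0          # roughly 72 km/h
CLOUD_ROUND_TRIP_MS = 100.0   # assumed network plus remote compute delay
ON_DEVICE_MS = 5.0            # assumed local (edge) inference time

for label, latency_ms in (("cloud", CLOUD_ROUND_TRIP_MS),
                          ("on-device", ON_DEVICE_MS)):
    blind_distance = distance_during_latency_m(SPEED_M_PER_S, latency_ms)
    print(f"{label}: {blind_distance:.2f} m travelled before a decision arrives")
```

Under these assumed numbers, the vehicle travels about 2 m while waiting on the remote round trip versus about 0.1 m with on-device computation, which is the kind of gap that motivates edge computing.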

A MIDDLE GROUND?

Many in the AI community understandably express concern about development and deployment of autonomous weapons. Nevertheless, despite numerous calls for banning autonomous weapons, several nation states with the military will and the economic means are moving forward to create such intelligent systems.

However, numerous technical challenges exist that may compromise the functionality and effectiveness of any autonomous weapons. The aforementioned AI issues must be overcome to minimize or completely avoid weapons that are, at best, dysfunctional and potentially harmful even to those deploying them and, at worst, capable of going rogue on their human operators.

A key question every AI researcher must ask is whether we would prefer to be on the unprotected receiving end of countries that ignored bans and moved forward in developing autonomous weapons (which may be rife with algorithmic errors, bias, and the other issues outlined above), or whether we would rather be in a carefully planned defensive position ourselves, with proper safeguards in place and those issues addressed. A growing chorus of op-eds advocates engagement by AI researchers with the defense community because of these concerns. [42], [43]

History has shown that nations that fell behind in technology advancement were subsequently outmatched on the battlefield. “In 1588, the mighty Spanish Armada was defeated by the British, who had more expertly exploited the revolutionary technology of the day: cannons. In the interwar period between World War I and World War II, Germany was more successful in capitalizing on innovations in aircraft, tanks, and radio technology and the result was the blitzkrieg—and the fall of France. The battlefield is an unforgiving environment. When new technologies upend old ways of fighting, militaries and nations don’t often get second chances to get it right.” [5]

Perhaps there is a middle ground in which proponents and opponents of autonomous weapons might meet. We propose that AI researchers execute basic research, in concert with both civilian and defense agencies, for the benefit of all autonomous systems efforts. Without engaging in the actual construction of weapons, AI researchers could support the US Government in its efforts to overcome the technical issues listed above. For example, countermeasures to autonomous weapons cannot be developed without a detailed understanding of the functionality of those weapons. Many US federal agencies (DARPA, IARPA, NSF, etc. [44]) offer funding that is targeted to basic science.

Such research would benefit the wider economy as well. FinTech, health technology, autonomous vehicles, energy systems, law enforcement, and many more AI sectors would benefit greatly from research into the topics above.

Thus, instead of blanket refusals to work with the US Government on AI issues that might lead to weapons development, perhaps a middle ground exists to execute basic research and thus aid both industry and defense.

CONCLUSIONS

History will most probably repeat itself: calls for banning autonomous weapons will be overwhelmed by already significant investments, concerns over adversary actions, and the potential for battlefield dominance. Well-meaning people will sign letters, resign their employment, or otherwise protest the development and use of autonomous weapons. International organizations will hold conferences and sign more comprehensive treaties. But when push comes to shove and nation states see their adversaries tooling up or applying weaponry powered by AI and robotics, they will have little hesitation to go all-in on autonomous systems. The signs are already there with the United States, China and Russia initiating large programs. Proliferation to other countries is only a matter of time, as we have seen with other technologies (3D printing, smartphones, etc.).

There is an inexorable push toward autonomous weapons being developed and deployed by nation states with the will and economic backing to do so. The AI community must remain diligent, and, in keeping with its own personal or organizational ethics, should consider engaging in basic science research to benefit both civilian and military applications as the technology advances.

NOTES (all links accessed August 2018)

[1] Hague Convention, https://www.britannica.com/event/Hague-Conventions

[2] A.Q. Arbuckle, “1914-1918, Balloons of World War I,” https://mashable.com/2016/03/02/wwi-balloons/#tSQb8HZBe8qo

[3] Unrestricted Submarine Warfare, https://en.wikipedia.org/wiki/Unrestricted_submarine_warfare

[4] N. Eardley, “Files show confusion over Lusitania sinking account,” 1 May 2014, https://www.bbc.co.uk/news/uk-27218532

[5] Paul Scharre, “Army of None: Autonomous Weapons and the Future of War,” 2018, W. W. Norton & Company, Inc.

[6] “Allied submarines in the Pacific War,” https://en.wikipedia.org/wiki/Allied_submarines_in_the_Pacific_War

[7] C. Pellerin, “Deputy Secretary: Third Offset Strategy Bolsters America’s Military Deterrence,” 31 OCT 2016, https://www.defense.gov/News/Article/Article/991434/deputy-secretary-third-offset-strategy-bolsters-americas-military-deterrence/

[8] M. Rosenberg, J. Markoff, “The Pentagon’s ‘Terminator Conundrum’: Robots That Could Kill on Their Own,” 25 OCT 2016, https://www.nytimes.com/2016/10/26/us/pentagon-artificial-intelligence-terminator.html

[9] “Background on Lethal Autonomous Weapons Systems in the CCW,” https://www.unog.ch/80256EE600585943/(httpPages)/8FA3C2562A60FF81C1257CE600393DF6?OpenDocument

[10] K. Johnson, “Google CEO bans autonomous weapons in new AI guidelines,” 7 JUNE 2018, https://venturebeat.com/2018/06/07/google-ceo-bans-autonomous-weapons-in-new-ai-guidelines/

[11] M. McFarland, “Leading AI researchers vow to not develop autonomous weapons,” 18 JUL 2018, https://money.cnn.com/2018/07/18/technology/ai-autonomous-weapons/index.html

[12] “Lethal Autonomous Weapons Pledge,” https://futureoflife.org/lethal-autonomous-weapons-pledge/?cn-reloaded=1

[13] C. Pash, “These tech leaders have signed a pledge against killer robots,” 19 JUL 2018, https://www.weforum.org/agenda/2018/07/the-worlds-tech-leaders-and-scientists-have-signed-a-pledge-against-autonomous-killer-robots

[14] G.M. Del Prado, “Stephen Hawking, Elon Musk, Steve Wozniak and over 1,000 AI researchers co-signed an open letter to ban killer robots,” 27 JUL 2015, https://www.businessinsider.com/stephen-hawking-elon-musk-sign-open-letter-to-ban-killer-robots-2015-7

[15] A. Mehta, “DoD stands up its artificial intelligence hub,” 29 JUN 2018, https://www.c4isrnet.com/it-networks/2018/06/29/dod-stands-up-its-artificial-intelligence-hub/

[16] J. Doubleday, “DOD wants $75 million to establish Joint AI Center, forecasts $1.7B over six years,” 18 JUL 2018, https://insidedefense.com/daily-news/dod-wants-75-million-establish-joint-ai-center-forecasts-17b-over-six-years

[17] Command, Control, Communications, Computers, Intelligence, Surveillance and Reconnaissance

[18] “AlphaGo versus Lee Sedol,” https://en.wikipedia.org/wiki/AlphaGo_versus_Lee_Sedol

[19] R. Faruk, “Military Implications of AlphaGo,” 30 MAY 2017, http://actionablethought.com/military-implications-of-alphago/

[20] E. Kania, “China's Artificial Intelligence Revolution,” 27 JUL 2017, https://thediplomat.com/2017/07/chinas-artificial-intelligence-revolution/

[21] V. Barhat, “China is determined to steal A.I. crown from US and nothing, not even a trade war, will stop it,” 4 MAY 2018, https://www.cnbc.com/2018/05/04/china-aims-to-steal-us-a-i-crown-and-not-even-trade-war-will-stop-it.html

[22] T. Campbell, “Kardashev Scale Analogy: Long-Term Thinking about Artificial Intelligence,” 5 NOV 2017, https://www.futuregrasp.com/kardashev-scale-analogy

[23] Unmanned Aerial Vehicles

[24] S.N. Romaniuk and T. Burgers, “China’s Swarms of Smart Drones Have Enormous Military Potential,” 3 FEB 2018, https://thediplomat.com/2018/02/chinas-swarms-of-smart-drones-have-enormous-military-potential/

[25] Unmanned underwater vehicles

[26] People’s Liberation Army

[27] P. Glass, “China’s Robot Subs Will Lean Heavily on AI: Report,” 23 JUL 2018, https://www.defenseone.com/technology/2018/07/chinas-robot-subs-will-lean-heavily-ai-report/149959/?oref=d-mostread

[28] A. Conn, “Podcast: Six Experts Explain the Killer Robots Debate,” 31 JUL 2018, https://futureoflife.org/2018/07/31/podcast-six-experts-explain-the-killer-robots-debate/

[29] J. Vincent, “Putin says the nation that leads in AI ‘will be the ruler of the world’,” 4 SEP 2017, https://www.theverge.com/2017/9/4/16251226/russia-ai-putin-rule-the-world

[30] “Putin: Leader in artificial intelligence will rule world,” 4 SEP 2017, https://www.cnbc.com/2017/09/04/putin-leader-in-artificial-intelligence-will-rule-world.html

[31] P. Tucker, “Russia to the United Nations: Don’t Try to Stop Us From Building Killer Robots,” 21 NOV 2017, https://www.defenseone.com/technology/2017/11/russia-united-nations-dont-try-stop-us-building-killer-robots/142734/

[32] S. Bendett, “Here’s How the Russian Military Is Organizing to Develop AI,” 20 JUL 2018, https://www.defenseone.com/ideas/2018/07/russian-militarys-ai-development-roadmap/149900/

[33] S. Hollister, “Amazon facial recognition mistakenly confused 28 Congressmen with known criminals,” 26 JUL 2018, https://www.cnet.com/news/amazon-facial-recognition-thinks-28-congressmen-look-like-known-criminals-at-default-settings/

[34] B. Barrett, “Lawmakers Can't Ignore Facial Recognition's Bias Anymore,” 26 JUL 2018, https://www.wired.com/story/amazon-facial-recognition-congress-bias-law-enforcement/

[35] M. Hutson, “Hackers easily fool artificial intelligence,” Science, 20 JUL 2018, 361 (6399), p. 215.

[36] Research at SCS, Carnegie Mellon University, https://www.cs.cmu.edu/research

[37] D. Gunning, “Explainable Artificial Intelligence (XAI),” DARPA, https://www.darpa.mil/program/explainable-artificial-intelligence

[38] D.S. Ganguly, “Top Artificial Intelligence trends in 2018-You Should Consider For AI & Machine Learning Development,” 3 JUL 2018, https://hackernoon.com/top-trends-in-2018-you-need-to-follow-for-artificial-intelligence-machine-learning-development-dfeee0eabb5f

[39] J. Markman, “This Is Why You Need To Learn About Edge Computing,” 3 APR 2018, https://www.forbes.com/sites/jonmarkman/2018/04/03/this-is-why-you-need-to-learn-about-edge-computing/#2b04f1571a56

[40] T. Campbell, R. Meagley, “Next-Generation Compute Architectures Enabling Artificial Intelligence - Part I of II,” 2 FEB 2018, https://www.futuregrasp.com/next-generation-compute-architectures-enabling-artificial-intelligence-part-I-of-II

[41] T. Campbell, R. Meagley, “Next-Generation Compute Architectures Enabling Artificial Intelligence—Part II of II,” 8 FEB 2018, https://www.futuregrasp.com/next-generation-compute-architectures-enabling-artificial-intelligence-part-II-of-II

[42] G.C. Allen, “AI researchers should help with some military work,” 6 JUN 2018, https://www.nature.com/articles/d41586-018-05364-x

[43] P. Luckey, T. Stephens, “Silicon Valley should stop ostracizing the military,” 8 AUG 2018, https://www.washingtonpost.com/opinions/silicon-valley-should-stop-ostracizing-the-military/2018/08/08/7a7e0658-974f-11e8-80e1-00e80e1fdf43_story.html?utm_term=.674b3489c79d

[44] Defense Advanced Research Projects Agency, Intelligence Advanced Research Projects Activity, National Science Foundation