Kardashev Scale Analogy: Long-Term Thinking about Artificial Intelligence

COSMOLOGICAL CONTEXT

Identifying the Next Big Thing is a constant pursuit among investors, researchers, and industry. To catch the next funding wave, emerging technologies are often proclaimed as paradigm shifters (3D-Printing! Artificial Intelligence! The Internet of Things! Genome Editing! Nanotechnology!). Unfortunately, the press tends to hype even minor discoveries or inventions as if they were already widely deployed and the technology had already reached its apex. But all technologies take time to develop, and frequently a long time, because major advances are needed between one capability phase and the next.

Perhaps it is warranted to step back and consider the grand challenges and major technological advances needed for a technology to achieve full fruition. As a framework for this exercise, we shall leverage as an analogy the cosmic scale for hypothetical energy control proposed by the Soviet astronomer Nikolai Kardashev in 1964.

As summarized by Wikipedia [1] (and as sourced from the original publication [2]): “The Kardashev scale is a method of measuring a civilization's level of technological advancement, based on the amount of energy a civilization is able to use for communication. The scale has three designated categories:

Type I civilization —also called a planetary civilization—can use and store all of the energy which reaches its planet from its parent star.

Type II civilization —also called a stellar civilization—can harness the total energy of its planet's parent star (the most popular hypothetical concept being the Dyson sphere—a device which would encompass the entire star and transfer its energy to the planet(s)).

Type III civilization —also called a galactic civilization—can control energy on the scale of its entire host galaxy.”

The Kardashev scale is a grand Gedankenexperiment that catalyzes thought about what humanity might be capable of hundreds of thousands, if not millions, of years from now. While it is certainly fun to think about galactic civilizations, could we instead use this model as a paradigm for a more grounded technology that is now seeing widespread use and rapid advancement? Let’s consider artificial intelligence (AI) in this light.

ARTIFICIAL INTELLIGENCE (AI) SCALE

AI is currently one of the hottest technologies in R&D and commercial applications. Numerous experts tout AI as a paradigm-shifting capability with the potential to disrupt multiple industries. There are countless AI blog posts, reports, and conferences. Technology giants, including Baidu and Google, spent between $20B and $30B on AI in 2016 alone. [3]

Despite all this interest and investment, all may not be rosy as society rushes to adopt AI, which is all the more reason to better appreciate how AI might evolve in the near and more distant future. As noted in the recent Global Trends: Paradox of Progress [4], drafted by the National Intelligence Council (NIC), Office of the Director of National Intelligence (ODNI) [5]:

"Artificial intelligence (or enhanced autonomous systems) and robotics have the potential to increase the pace of technological change beyond any past experience, and some experts worry that the increasing pace of technological displacement may be outpacing the ability of economies, societies, and individuals to adapt. Historically, technological change has initially diminished but then later increased employment and living standards by enabling the emergence of new industries and sectors that create more and better jobs than the ones displaced. However, the increased pace of change is straining regulatory and education systems’ capacity to adapt, leaving societies struggling to find workers with relevant skills and training."

Some may think AI is a ‘new’ technology because it became widely recognized only in the last few years, but it has been around for decades. In 1950, Alan Turing proposed that intelligence could be encoded in a machine. [6] Following several decades of excitement and disappointment (including at least two AI ‘winters’ in which funding and researchers dried up because AI failed to live up to expectations), there was a step change in AI capabilities in 2012. During an image recognition contest built on a large database, “ImageNet,” one team successfully leveraged large computational power to improve image classification accuracy dramatically. As described by MIT Technology Review:

"This was the first time that a deep convolutional neural network had won the competition, and it was a clear victory. In 2010, the winning entry had an error rate of 28.2 percent, in 2011 the error rate had dropped to 25.8 percent. But SuperVision won with an error rate of only 16.4 percent in 2012 (the second best entry had an error rate of 26.2 percent). That clear victory ensured that this approach has been widely copied since then." [7]

Since that early successful deployment of deep learning (DL), AI researchers have rushed to apply DL in almost every market sector. Financial services, marketing, transportation, education, the medical industry, and others are all being assessed for ways DL could make processes more efficient, faster, and more cost effective. [8] Interest in AI R&D is intense, and the competition for talent is fierce. [9]

While some argue that AI research has actually plateaued (can’t we accomplish more than just another minor tweak on current DL algorithms?), others argue that we’ve only just begun (but what if we successfully map the brain?). Irrespective of who is right and how rapidly AI develops, advancements in AI will continue. Many countries and companies have multibillion-dollar AI initiatives. [10]

Although it is certainly exciting to have broad discussions about autonomous vehicles [11] and better chatbots to help us choose that optimal shopping item [12], where might we see AI developing in the long term? To get a sense of what the future of AI might hold, we propose here a set of advancement levels (‘Types’) of AI, following the pattern of the Kardashev scale noted earlier. To keep the scale anchored in the present, we add a Type 0 to our hypothetical AI scale.

Type 0 AI (also called Narrow AI): An AI demonstrates near-perfect single-task capabilities, e.g., speech recognition, search engines, machine-composed news reports.

Type I AI (also called Enhanced Narrow AI): An AI demonstrates capabilities approaching those of a human overall, e.g., Level III-IV autonomous vehicles, conversational chatbots essentially indistinguishable from real humans, robots capable of mimicking the mobility of humans or other animals.

Type II AI (also called General AI): An AI is proven able to fully mimic all human capabilities, including planning, memory, analysis, and five or more senses.

Type III AI (also called Superintelligence): An AI is proven to far exceed human capabilities and is capable of continuous self-improvement (self-sensing and self-reprogramming); its IQ would be so high as to be immeasurable.

As with the Kardashev scale for energy, the leaps needed between Types of AI are large. However, the point of this article is not to daunt us into considering such steps impossible, but rather to step back from daily AI research and consider where we might be headed. Is achieving Type III AI as outlined here possible? How might the field of AI transition from our current ‘Narrow AI’ to Superintelligence?

POSSIBLE EVOLUTION OF AI

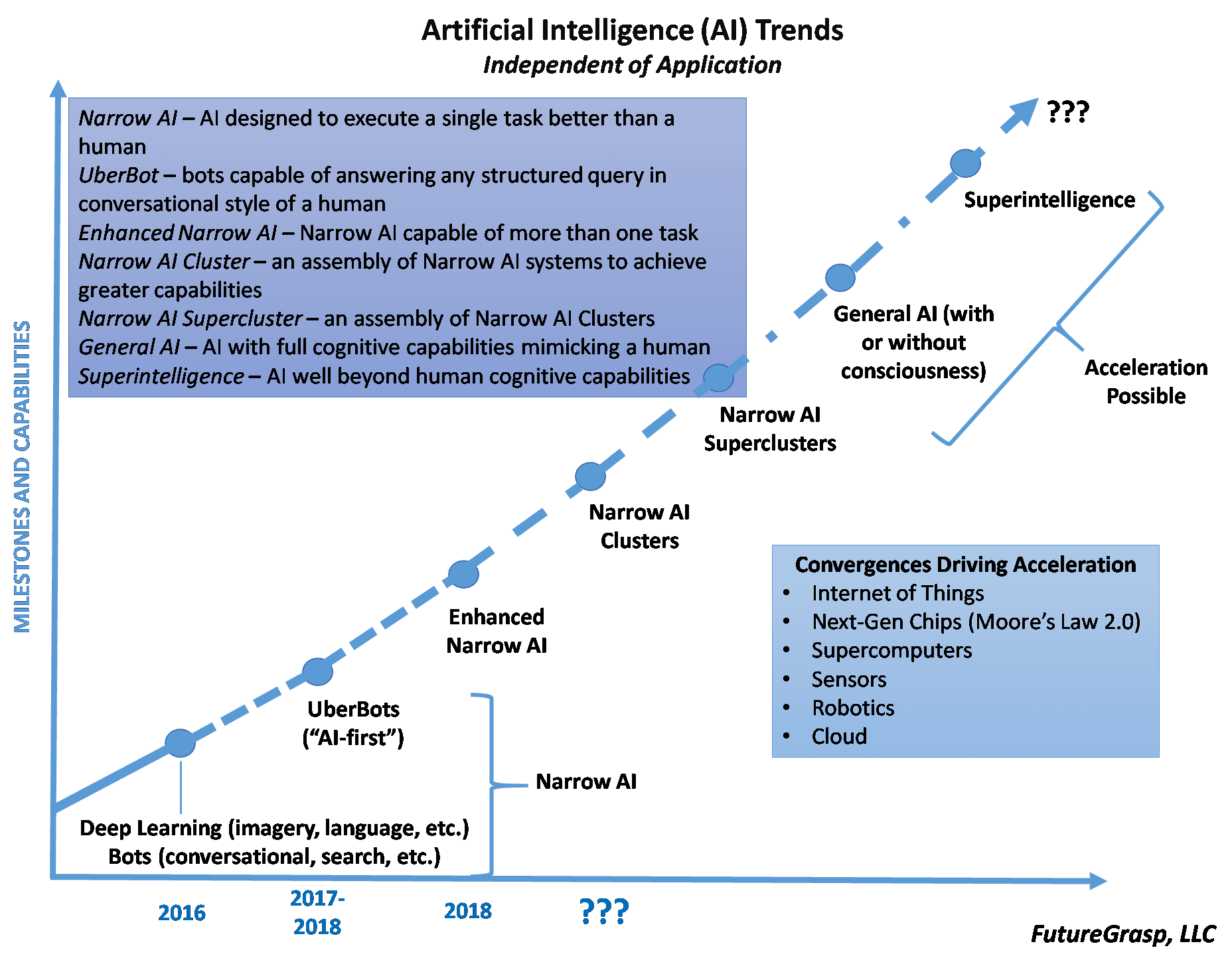

To aid our thinking about AI development, we further propose a rough map from Narrow AI to Superintelligence, overlaid with the outside influences of other technologies that might accelerate AI R&D and capabilities; see figure.

Presently, we are in the age of Narrow AI. DL and bots are becoming standard in many applications (e.g., Apple’s Siri, Google’s search engine). Several companies (e.g., Amazon, Google, Baidu) are now championing an “AI-first” approach to technology. These investments may enable us to move up the curve in the next two years toward UberBots: algorithms, such as those in Amazon’s Alexa or Google Home, capable of answering any structured query in the conversational style of a human. Already in China, the company Xiaoi operates conversational chatbots that millions use daily. “Xiaoi [is] a conversational AI giant whose bots — deployed to almost every business sector in China — have engaged 500 million users and processed more than 100 billion conversations. Xiaoi bots take on different roles in finance, automotive, telecommunications, e-commerce, and other industries, serving many Fortune 100 companies.” [13]

As these capabilities become more advanced, we may see the Type I AI listed above, Enhanced Narrow AI. Using an autonomous vehicle as an example, an Enhanced Narrow AI may be capable not only of driving with minimal human interaction, but also of simultaneously navigating, booking a table at your favorite restaurant, helping you answer your email, etc., all while you watch city traffic with only a wary eye and a readiness to assume control if the AI fails to anticipate an accident.

Beyond Enhanced Narrow AI, we propose here the concepts of Narrow AI Cluster and Narrow AI Supercluster. Harking back to the cosmological analogy, we can borrow from astronomy the notion of galaxy clusters and superclusters, groupings of galaxies that move together through the universe. [14] Grouping Narrow AIs into more powerful programming clusters may offer more capabilities than the distinct Narrow AIs offer on their own. One can then envision that Narrow AI Clusters and Narrow AI Superclusters could push us into Level IV and V autonomous vehicles that could take over all driving responsibilities, while providing one’s favorite entertainment, a private work space in the car, and other amenities now found at best in a well-appointed hotel room.

General AI may be the most difficult Type to achieve, and also the most difficult to recognize. A fascinating development in AI research is the debate among ethics experts and philosophers over what characteristics an AI would need to qualify as General AI. Some thinkers take the position that General AI would only be achieved if the AI becomes conscious or self-aware. [15] We take the tack here that General AI could be achieved with or without actual machine consciousness.

As an analogy, one may debate whether animals are truly conscious. Do they recognize the passage of time beyond base instinctual reactions to weather and seasons? Do they recognize themselves as separate organisms with potential for independent actions? However one answers these questions—and the answers may be species-dependent—one would be hard-pressed to argue that animals are not adapted to their particular environment and their survival. Thus, they are core actors within their respective ecologies, whether or not they are self-aware.

The same may be true of a General AI. Would a General AI with all the trappings of clusters of Narrow AIs, even without consciousness, be indistinguishable from a human? Would it not also dramatically influence our environment? A recent publication in Science explores this point:

"We contend that a machine endowed with C1 [i.e., global availability consciousness, in which there exists a relationship between a cognitive system and a specific object of thought – ‘Information that is conscious in this sense becomes globally available to the organism; for example, we can recall it, act upon it, and speak about it.’] and C2 [i.e., self-monitoring consciousness, ‘…a self-referential relationship in which the cognitive system is able to monitor its own processing and obtain information about itself.’] would behave as though it were conscious; for instance, it would know that it is seeing something; would express confidence in it, would report it to others, could suffer hallucinations when its monitoring mechanisms break down, and may even experience the same perceptual illusions as humans." [16]

Superintelligence may happen soon after General AI is created. Several labs are now working on training an AI to self-program (equivalent to self-healing or exercise for humans). Google recently announced that it has an AI, AutoML, that is “creating more powerful, efficient systems than human engineers can.” [17] Some philosophers believe that the leap from General AI to Superintelligence will be so fast that humans may not even recognize that it is happening. A General AI could recognize its foibles and correct them, and the resulting AI could then improve itself again, in a rapid cycle that could culminate in an AI vastly superior in intelligence and capabilities to even a genius human. Where superintelligence might lead society is anybody’s guess, but we can be sure that a superintelligence could dramatically change how we exist as humans. [18] Intractable problems such as finding the optimal drug may become child’s play for an AI, and even grand challenges such as the UN Millennium Development Goals [19] may be met. There is no precedent for what a superintelligence (or cluster of superintelligences) might mean.

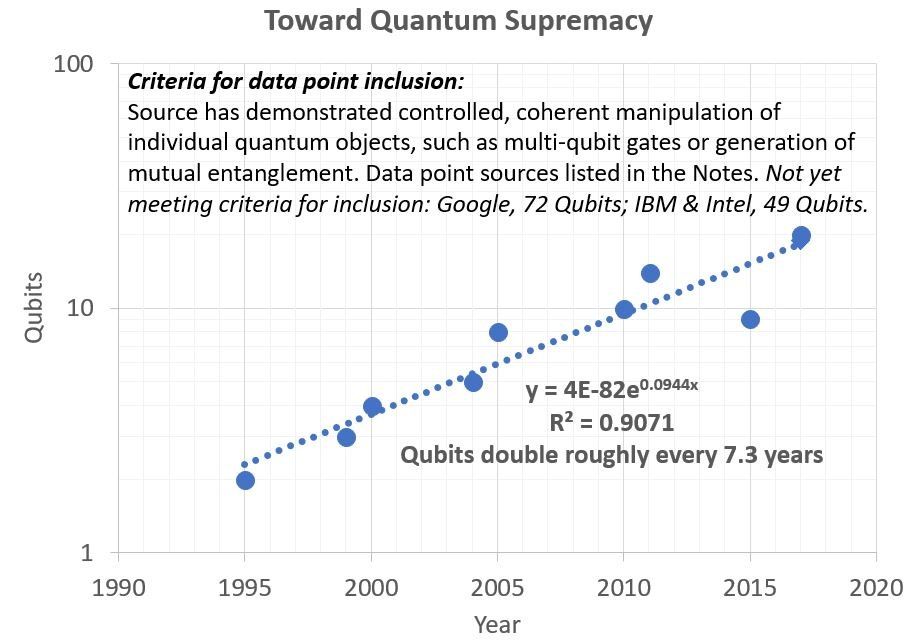

Ultimately, it is impossible to predict how quickly this journey might occur, hence the ??? on the graph’s abscissa. However, we should not look at AI in a silo. Many other technologies could accelerate the development of AI, as listed in the textbox Convergences Driving Acceleration. The Internet of Things, assuming proper standards are put in place for data transfer and cybersecurity, could provide exabytes or more of additional data from which an AI might learn. Trillions of sensors, coupled with robotics and the cloud, would feed AI vast datasets. Next-generation chips (e.g., graphics processing units, GPUs; quantum computing designed for AI) are already being designed by multiple companies specifically for AI. Nations are in a race for the world’s fastest supercomputers, which themselves may be applied to create new genres of AI. It is crucial that AI researchers keep an eye on all these and other fields to best leverage their capabilities.

SO WHERE ARE WE ON THE AI SCALE?

Clearly, we are low on the Kardashev scale now in terms of cosmological expansion and energy control; we humans are not even at Type I yet. Unfortunately, we are at a similarly low level on our proposed AI Scale, hovering somewhere around Type 0 AI. However, we may not stay at that level for long, given the many billions of dollars now invested in AI R&D, intense interest in AI education, and numerous large technology companies betting their future on AI.

The mapping above of a possible path from our current Narrow AI to Superintelligence is just one means by which we may create an intelligence orders of magnitude more capable than ourselves. Regardless of how we get to General AI or Superintelligence, the future will most probably have much AI in it.

CONCLUSIONS

While we may be millennia away from controlling all the energy in our galaxy, significant advances can still be made in technologies such as AI to lead us into longer, more productive lives. Recognizing the major milestones needed and possible pathways toward achieving them can offer crucial insights to researchers and policymakers.

As with all technology, what may seem improbable today may become common in the future. Ultimately, we should not shrink from research challenges that are hard, but embrace them with our end goals in mind. As the Norwegian explorer and Nobel Peace Prize winner Fridtjof Nansen said, “The difficult is what takes a little time; the impossible is what takes a little longer.”

REFERENCES

[1] “Kardashev Scale,” https://en.wikipedia.org/wiki/Kardashev_scale , accessed 10/17/17.

[2] Kardashev, Nikolai (1964). "Transmission of Information by Extraterrestrial Civilizations". Soviet Astronomy. 8: 217.

[3] “McKinsey's State Of Machine Learning And AI, 2017,” July 9, 2017, http://www.forbes.com/sites/louiscolumbus/2017/07/09/mckinseys-state-of-machine-learning-and-ai-2017/ , accessed 10/17/17.

[4] Global Trends: Paradox of Progress, January 2017, https://www.dni.gov/files/documents/nic/GT-Full-Report.pdf , accessed 10/17/17.

[5] The author was the first National Intelligence Officer for Technology with the NIC from February 2015 to August 2017.

[6] A. M. Turing (1950) Computing Machinery and Intelligence. Mind 59: 433-460.

[7] “The Revolutionary Technique That Quietly Changed Machine Vision Forever,” September 9, 2014, https://www.technologyreview.com/s/530561/the-revolutionary-technique-that-quietly-changed-machine-vision-forever/ , accessed 10/17/17.

[8] For example, see the portfolio investments of Bootstrap Labs, https://bootstraplabs.com/ , accessed 10/17/17.

[9] “Tech Giants are Paying Huge Salaries for Scarce A.I. Talent,” October 22, 2017, https://www.nytimes.com/2017/10/22/technology/artificial-intelligence-experts-salaries.html , accessed 11/1/17.

[10] Leading nations have multi-billion dollar AI initiatives, including China. In the United States, industry invests far more than the federal government.

[11] Autonomous vehicles have five recognized levels of automation above the no-automation baseline: Level I is on the order of adaptive cruise control, whereas Level V means full autonomy with no driver intervention required. https://web.archive.org/web/20170903105244/https://www.sae.org/misc/pdfs/automated_driving.pdf , accessed 10/17/17.

[12] “How AI Will Change Strategy: A Thought Experiment,” October 3, 2017, https://hbr.org/2017/10/how-ai-will-change-strategy-a-thought-experiment?imm_mid=0f734b&cmp=em-data-na-na-newsltr_ai_20171016 , accessed 11/1/17.

[13] “The Most Successful Bot Company You’ve Never Heard Of,” July 13, 2017, https://www.topbots.com/xiaoi-bot-chatbot-china-ai-technology/ , accessed 10/17/17.

[14] The Milky Way is part of the Local Group galaxy cluster (that contains more than 54 galaxies), which in turn is part of the Laniakea Supercluster. This supercluster spans over 500 million light-years, while the Local Group spans over 10 million light-years. The number of superclusters in the observable universe is estimated to be 10 million. https://en.wikipedia.org/wiki/Supercluster#cite_note-2 , accessed 10/17/17.

[15] Of course, consciousness itself is rather ill-defined and its fundamental characteristics engender fierce debate among philosophers.

[16] S. Dehaene, H. Lau, S. Kouider, “What is consciousness, and could machines have it?,” Science 358, 486-492, 27 October 2017.

[17] “Google’s Machine Learning Software Has Learned to Replicate Itself,” October 16, 2017, https://futurism.com/googles-machine-learning-software-has-learned-to-replicate-itself/ , accessed 10/17/17.

[18] For a full treatment of this issue, see “Superintelligence: Paths, Dangers, Strategies,” Nick Bostrom, Oxford University Press, 2016.

[19] “Millennium Development Goals and Beyond 2015,” http://www.un.org/millenniumgoals/ , accessed 10/17/17.